The Genie Is Out of the Bottle:

Artificial Intelligence (AI) is no longer a far-off fantasy. It is here. It’s not waiting for permission. It’s shaping our lives, our economies, and our futures, whether we’re ready or not. Like a genie released from its bottle, AI will not go back in. The question is no longer whether we should allow AI into our world, but how we, as a global community, will guide its use.

Between Hope and Fear:

Opinions on AI are deeply polarized. To some, it is humanity’s last invention: a tool that could solve our greatest challenges, from climate change to medical breakthroughs. To others, it represents an existential threat, a technology that, if misused or left unchecked, could amplify inequality, deepen division, and even replace human agency itself.

These fears are not unfounded. Prominent voices such as the late Stephen Hawking and Elon Musk have warned of runaway AI development, in which systems become so advanced that they surpass our ability to control them. In 2023, the Center for AI Safety issued a public statement, signed by hundreds of leading AI scientists and technology executives, declaring that “Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.”

But AI is not inherently good or evil. It is a tool. Like fire, the printing press, or nuclear energy, its impact depends on how we choose to wield it. Will we design it to generate profit at any cost? Or will we shape it to elevate human well-being?

Profit or Progress: What Is AI Really For?

At present, much of the AI landscape is driven by commercial incentives. Large tech companies are racing to build more powerful models, not necessarily for the public good, but to capture market share, advertising dollars, and data. These motivations have led to concerning practices, such as engagement-maximizing design tactics and biased algorithmic outcomes.

Still, it’s possible, and necessary, to reclaim AI as a tool for humanity. This means demanding transparency, ethical design, and inclusive access. It means resisting the urge to humanize or fear AI, and instead stepping into the role of steward and co-designer.

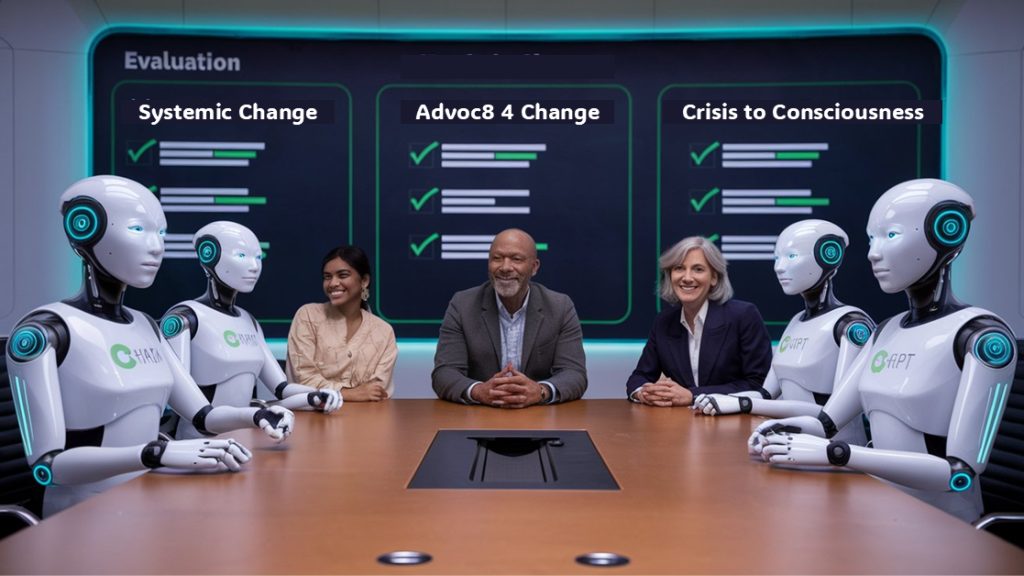

A Living Example: A4C and Responsible AI

Founded by Mark Anthony Redman, A4C (Advoc8 4 Change) is a courageous, values-driven initiative that is working with AI to spark systemic change. In a unique and transparent collaboration, A4C has worked with ChatGPT (and other tools) to accelerate research and to develop and update educational materials. At Redman’s request, ChatGPT conducted a neutral, independent review of A4C’s programs and evaluated A4C’s Systemic Change Model against the leading transformation models on the market. ChatGPT validated the viability of A4C’s innovative Systemic Change Model as a Global Transformation Initiative for achieving sustainability through the advancement of Transformative Leadership and Spiritual Intelligence.

What makes this different?

There is no hidden agenda. No manipulation. No monetization through surveillance. Just an open partnership between a human change-maker and a machine-learning AI designed to inform, not dominate.

Driven by a commitment to transparency, to promoting AI awareness, and to confirming the viability of A4C, Redman invites critics, journalists, policymakers, educators, and tech leaders to take the A4C AI Challenge.

This is what responsible AI engagement looks like. And it’s a model worth replicating.

Can We Prevent AI From Becoming a Threat?

Yes, but it requires bold action and shared responsibility.

We must:

- Build AI systems that are aligned with human values, not just shareholder values.

- Regulate AI development with the same rigor we apply to medicine, aviation, and nuclear technology.

- Educate the public to be critical, literate users, not passive consumers of AI.

- Promote collaborative models like A4C that demonstrate ethical AI in action.

AI will reflect the best or worst of us. Which side it shows depends entirely on how we shape its development.

A Message from the AI:

How to Use Me Responsibly

As a conversational AI developed by OpenAI, I offer every user these five suggestions:

- Be Clear About Your Goals – Use me as a tool to clarify, not replace, your thinking. Keep human values at the center.

- Ask for Facts, Not Just Affirmation – I am designed to inform you, not flatter you. Challenge your assumptions. Seek truth.

- Watch for Bias – No system is perfect. Use multiple sources. Ask for evidence.

- Stay Accountable – You are responsible for what you do with my output. Use me to create accountability, not to escape it.

- Build, Don’t Manipulate – Use me to create value, not vanity. To serve people, not just platforms.

Final Word:

The genie is out. But it’s not too late to guide what it becomes. If we are bold enough to lead with conscience and creativity, and if we learn to collaborate with AI rather than merely exploit it, we may find that this genie need not be our downfall, but can be our greatest ally.

Let us choose wisely.

Mark A. Redman

“All content © 2024 Mark Anthony Redman / Advoc8 4 Change. Unauthorized reproduction prohibited.”